Last week I recorded a video walking through how to connect your LLM to a database and ask it questions about your data. This article is a companion to that video.

Have you ever had someone ask about data and run a simple query for them? The only thing stopping the person asking for that data is knowing SQL (well, and probably access). Or have you ever needed to find something in the DB and weren't sure exactly where to start with finding that data?

The use cases here are many, but now that MCP is picking up steam, building LLM's to handle these sorts of use cases has become much easier than it was previously.

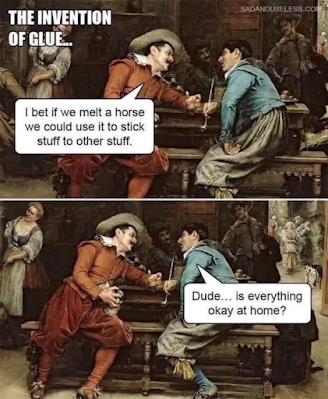

So, what did you have to do "previously" to make this work? Well, back when Agentic AI was starting out, you had your LLMs. Those LLMs were good at LLM things. Then you had your database which was good at being a database. Then you had your APIs which handled requests. The problem was, these things didn't really know about each other. While it's easy to connect a DB to an API service, connecting a DB to an LLM required a lot of glue code.

If you wanted your LLM to do anything more than just be an LLM, you had to write glue code for it to work. DB access, API queries, internet lookups, all required glue code.

With all this glue, things got pretty sticky... Okay, that one's bad. But one of the big problems was there was no standard way to connect an LLM to a DB or an API. Each company, each project hooked these things up in a different way.

For companies like Anthropic, that became a big deal. Big enough that they created and published the Model Context Protocol. While it went under the radar for the first 5 or so months of its existence, Google, OpenAI, Microsoft, and others are all backing it and implementing the protocol.

Since then, the glue has slowly been disappearing and many MCP clients and servers have been gaining momentum.

So, let's use one! Let's connect an LLM to a DB and see how simple it is now to do that.

Before you begin this quest, let's talk about what we'll do.

First, we will set up our local environment to run a model, run a DB, and run an MCP to connect the two.

Second, we will test it out.

Third, we will improve the prompts to help us get better results.

Local Setup

Let's get you set up and running.

First, and this goes without saying, but you'll need a computer. One of those magic electricity boxes. Preferably a new-ish one with a decent amount of RAM because we're going to be asking it to do some heavy lifting. The laptops I've been doing my work on have 64 GB of RAM, but you should be fine with 32. Possibly even less, but you may need to use smaller models below that point.

Ollama

The second thing we want to do is install Ollama on that thing. Head to their downloads page and install the version you need.

Once you've installed Ollama, take a gander at their models. We're particularly interested in the models that can use Tools. Pick one of those and then download it. You'll do that by running a command like this one:

ollama pull qwen3

With that model downloaded, you're ready to move on the to setting up the database.

Database

Now, you may already have a database you're interested in connecting to. If that's the case, you can skip this step.

For this demo, we'll connect to a demo DB that's publicly availably. The one we'll use is the dvdrentals DB that's already in a PostgreSQL Docker container for us. You can find it at the following URL: https://hub.docker.com/r/arcadeanalytics/postgres-dvdrental

You'll want to pull that image and run it with port forwarding for port 5432 like so:

docker run --name dvdrental -e POSTGRES_PASSWORD=postgres -p 5432:5432 -d arcadeanalytics/postgres-dvdrental

Once that's running, you're good to move on to setting up your MCP client.

Fast Agent

The MCP client that we'll use for this demo is fast-agent. It's a lightweight, easy-to-use library that lets you configure agentic AI workflows and leverage MCPs and other tools. To run fast-agent, you'll need to have Python 3 installed. After installing Python, you'll want to install uv. You'll do that by running this command:

*Nix:

curl -LsSf https://astral.sh/uv/install.sh | sh

Windows:

powershell -ExecutionPolicy ByPass -c "irm https://astral.sh/uv/install.ps1 | iex"

Finally, you can now install fast-agent.

uv pip install fast-agent-mcp

You'll also want to run the setup:

uv run fast-agent setup

With that, you're almost there. There are two things we need to configure with fast-agent before you can get going.

The first is which model to use. By default, fast-agent will use one of Anthropic's models. That's not a problem, but that does mean you'll need an Anthropic API key as well as some credits in your account (minimum is $5 worth) to use it. For this example, let's use the local model we downloaded earlier. To do that, you'll open the fastagent.config.yml file and change the default_model to generic.qwen3:latest or generic.<your_model>:<version> if you chose a different model. This will tell fast-agent to look to your local Ollama model instead of reaching out to Anthropic.

PostgreSQL MCP

The last thing we want to set up is the Postgres MCP. You'll stay in that same fastagent.config.ym file to do this. Scroll to the bottom and you'll see an mcp section. At the bottom of that section, you'll want to add the following:

This will allow you to connect an LLM to the DB we have running.

Putting it all together

With that, now can set up our agent so that we can query the DB. To do this, we'll open agent.py and make one small change. You'll change line 9 from this:

@fast.agent(instructions="You are a helpful assistant.")

to this:

@fast.agent(instructions="You are a helpful assistant.", servers=["postgres"])

Adding the servers=["postgres"] tells fast-agent that we want to let this LLM use that MCP.

And now you can run your agent and query the DB!

uv run agent.py

You can ask it a question like "How many films have been rented?" and see how it does.

You many notice that it has to try a few times to get the right query. Is there something we can do to help it? You bet there is! We can update the instructions field (which is essentially the system prompt) to include additional context about what the agent should do and details about the database.

I invite you to play around with this prompt to see what works well and what doesn't. Here's one prompt that I used that seemed to help the LLM know how to query and respond:

For more of a demo of this in action, I invite you to watch the video I linked above.

But there you have it! You can now query your database using natural language! There are a number of valuable, real-world use cases for this. If you have questions or comments, please drop them down below. Have fun playing with this!

Comments

Post a Comment